Website technical setup

Technical setup covers the SEO practices other than content optimization and link building. It is used to improve how search engines crawl a website and to prepare the website for on-page optimization and off-page optimization (link building).

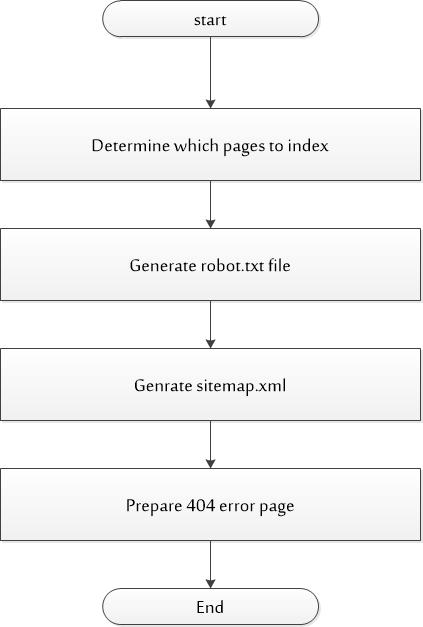

Indexing:

In the indexing process, the website owner decides which webpages search engines (SE) should index and which they should not, as illustrated in Figure (1). Not every page of a website should be indexed. In the websites under study, the following pages are excluded from indexing:

- Webpages that do not have enough content, such as blog category pages, tag pages, and media file pages, because thin content has a negative impact on website ranking.

- Private webpages, such as the terms of service, use of cookies, and privacy policy pages.

- Webpages which have duplicate content.

- Webpages that contain downloadable content, such as PDF files.

Figure (1): Website indexing process
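The exclusion rules above can be sketched as a small URL classifier. This is only an illustration. The URL patterns (`/category/`, `/tag/`, `/attachment/`, `/privacy-policy/`, and so on) are hypothetical WordPress-style paths, not the actual URLs of the websites under study. Duplicate pages are passed in as an explicit set because detecting duplication is outside the scope of this sketch.

```python
from urllib.parse import urlparse

# Hypothetical WordPress-style URL patterns for the excluded page types;
# the real paths depend on each site's permalink settings.
THIN_CONTENT_PREFIXES = ("/category/", "/tag/", "/attachment/")
PRIVATE_PAGES = {"/terms-of-service/", "/use-of-cookies/", "/privacy-policy/"}
DOWNLOAD_EXTENSIONS = (".pdf",)


def should_index(url: str, duplicate_paths: frozenset[str] = frozenset()) -> bool:
    """Return True if the page at `url` should be indexed under the rules above."""
    path = urlparse(url).path.lower()
    if path.startswith(THIN_CONTENT_PREFIXES):
        return False  # not enough content: category, tag and media pages
    if path in PRIVATE_PAGES:
        return False  # private pages: terms of service, cookies, privacy policy
    if path in duplicate_paths:
        return False  # pages known to duplicate content found elsewhere
    if path.endswith(DOWNLOAD_EXTENSIONS):
        return False  # downloadable content such as PDF files
    return True


for url in (
    "https://example.com/seo-guide/",
    "https://example.com/tag/seo/",
    "https://example.com/files/report.pdf",
):
    print(url, should_index(url))
```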

The “All in One SEO Pack” [1] plugin is used to apply the above settings for website indexing. In addition to the plugin's general settings, any individual page can be prevented from being indexed by adding a “noindex” meta tag to the page's HTML code. This can be done through the plugin's options on each page.
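A page excluded in this way typically carries a robots meta tag such as `<meta name="robots" content="noindex">` in its `<head>`. The Python sketch below uses only the standard-library `html.parser` module to check whether an HTML document contains that directive. `RobotsMetaParser` and `has_noindex` are hypothetical helpers for illustration. They are not part of the plugin.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags (hypothetical helper)."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names, but not attribute values.
        if tag != "meta":
            return
        attributes = {name: value or "" for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            for directive in attributes.get("content", "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex, follow"></head><body>Privacy policy</body></html>'
print(has_noindex(page))
```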

After deciding which pages to index, a robots.txt file should be generated. This file gives search engine robots instructions about which pages of the website they may crawl and which they should not. The “All in One SEO Pack” plugin can generate a robots.txt file, and it was used to generate the robots.txt files for the websites under study. The generated file can be found at https://example.com/robots.txt. Before a spider starts crawling a site, it reads the rules in the robots.txt file.
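Python's standard `urllib.robotparser` module can show how a crawler interprets robots.txt rules. The rules below are a hypothetical example, not the file generated for the websites under study. The sketch parses them and asks whether a crawler may fetch two URLs.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, not the file generated for the sites under study.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ("https://example.com/blog/", "https://example.com/wp-admin/options.php"):
    # can_fetch() applies the rules of the matching User-agent group ("*" here).
    print(url, parser.can_fetch("Googlebot", url))

# Sitemap lines are collected separately (site_maps() is available since Python 3.8).
print(parser.site_maps())
```

Python's parser applies the first matching rule in file order. Google applies the most specific (longest) matching rule instead. When `Allow` and `Disallow` rules overlap, the two can therefore give different answers.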

Next, a sitemap should be generated for the website. The sitemap lists the pages that should be crawled and shown in search results, and it organizes them in a proper hierarchy. It was generated using the “All in One SEO Pack” plugin and is available at https://example.com/sitemap.xml. The sitemap should then be made available to Google, either by referencing it in the robots.txt file or by submitting it directly in Google Search Console.
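A sitemap follows the XML format defined by the sitemaps.org protocol. It has a `<urlset>` root element that contains one `<url>` entry, with a `<loc>` child, for each page. The sketch below builds such a file with Python's `xml.etree.ElementTree`. The page list and the `build_sitemap` helper are hypothetical, and optional fields such as `<lastmod>` are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal sitemap.xml document listing the given page URLs (hypothetical helper)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page_url in urls:
        url_element = ET.SubElement(urlset, "url")
        ET.SubElement(url_element, "loc").text = page_url
    ET.indent(urlset)  # pretty-print the tree (Python 3.9+)
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical list of indexable pages.
print(build_sitemap(["https://example.com/", "https://example.com/contact/"]))
```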

Even after all of the above indexing steps, one challenge remains. When a spider crawling the website reaches a broken page, it leaves immediately. This stops the indexing of the website, and broken pages are considered an indicator of a poor-quality website. Therefore, a custom 404 error page should be prepared with useful links to other pages, so that spiders can continue crawling and indexing the site. In the websites under study, custom 404 error pages were prepared with links to the website's home page and contact page.
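The behaviour of a custom 404 page can be sketched without reference to how the websites under study implement it. The following minimal Python example uses the standard `http.server` module. It serves a hypothetical set of pages and answers any unknown path with an error page that links to the home page and the contact page. One design point matters here: the custom page is sent with HTTP status 404 rather than 200. Search engines can treat an error page returned with status 200 as a normal page, which is known as a soft 404.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical pages of the site, keyed by request path.
PAGES = {
    "/": "<h1>Home</h1>",
    "/contact/": "<h1>Contact us</h1>",
}

# Custom 404 page with links back to the home page and the contact page.
NOT_FOUND_PAGE = (
    "<h1>Page not found</h1>"
    '<p>Go to the <a href="/">home page</a> or the '
    '<a href="/contact/">contact page</a>.</p>'
)


def render(path: str) -> tuple[int, str]:
    """Return (HTTP status, HTML body) for a requested path."""
    if path in PAGES:
        return 200, PAGES[path]
    # Unknown path: serve the custom page but keep the 404 status so that
    # search engines do not treat it as a regular page (a "soft 404").
    return 404, NOT_FOUND_PAGE


class SiteHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        # Drop any query string before looking up the page.
        status, body = render(self.path.split("?", 1)[0])
        payload = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8000) -> None:
    """Run a local test server until interrupted."""
    HTTPServer(("127.0.0.1", port), SiteHandler).serve_forever()
```

Calling `serve()` starts a local server on port 8000. A request for any undefined path, such as `http://127.0.0.1:8000/old-post/`, then returns the custom page with status 404. The paths, port, and page contents are assumptions chosen for illustration.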